The Hidden Cost of Flaky Tests: How to Build Deterministic E2E Test Suites

Master the art of eliminating unreliable tests that pass sometimes, fail randomly, and destroy team confidence in automation

You've seen it before: a test passes on your machine, fails in CI, passes when you retry it, then fails again tomorrow morning. Your team starts ignoring test failures. Pull requests get merged with "known flaky test" comments. Before you know it, your entire test suite becomes a coin flip instead of a safety net.

Flaky tests are more than annoying—they're expensive. Teams waste hours debugging false positives, developers lose trust in automation, and worst of all, real bugs slip through when everyone assumes failures are "just flakiness." Today, we're diving deep into the root causes of test flakiness and the architectural patterns that eliminate it permanently.

The Four Horsemen of Test Flakiness

After analyzing thousands of flaky tests across Playwright, Cypress, and Selenium codebases, four patterns emerge repeatedly:

1. Race Conditions and Timing Issues

The most common culprit. Your test clicks a button before the JavaScript finishes loading, or checks a value before the API response arrives. The deadly pattern looks like this:

```typescript
// ❌ FLAKY: Race condition waiting to happen
await page.click('#submit-button');
await page.click('#next-step'); // May execute before submit completes

// ✅ DETERMINISTIC: Wait for the operation to complete
// Start listening for the response *before* clicking, so a fast reply can't be missed
const submitResponse = page.waitForResponse(resp =>
  resp.url().includes('/api/submit') && resp.status() === 200
);
await page.click('#submit-button');
await submitResponse;
await expect(page.locator('#next-step')).toBeVisible();
await page.click('#next-step');
```

The fix isn't adding arbitrary sleep(5000) calls; those make tests slower and are still unreliable. Instead, wait for specific conditions that prove the system is ready.
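Outside Playwright's auto-waiting, or in a runner that lacks it, the same idea can be written as a small polling helper. This is a minimal sketch, not a library API: `waitForCondition` and its `timeoutMs`/`intervalMs` options are hypothetical names. It re-checks a condition on a short interval, returns as soon as the condition holds, and fails with a clear timeout error instead of sleeping for a fixed period.

```typescript
// Hypothetical helper: poll a condition until it holds or a deadline passes.
// Unlike a fixed sleep, it returns as soon as the condition is true.
async function waitForCondition<T>(
  check: () => T | Promise<T>,
  { timeoutMs = 5000, intervalMs = 50 }: { timeoutMs?: number; intervalMs?: number } = {},
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const value = await check();
    if (value) return value; // Any truthy result counts as "ready"
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await new Promise(resolve => setTimeout(resolve, intervalMs));
  }
}

// Usage: a flag that becomes true after roughly 120 ms
let ready = false;
setTimeout(() => { ready = true; }, 120);
waitForCondition(() => ready, { timeoutMs: 1000, intervalMs: 20 })
  .then(v => console.log('ready:', v)); // logs "ready: true"
```

In Playwright itself, prefer the built-in web-first assertions. A helper like this is only worth having for conditions that no locator or response can express.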

2. State Pollution Between Tests

Tests pass when run individually but fail when run as a suite because they share state. Classic symptoms include:

- Database records created by test A interfere with test B's assertions

- LocalStorage or cookies persist across tests

- Global JavaScript variables leak between test contexts

- Test execution order determines pass/fail outcomes
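The principle behind the fix is that every test starts from a known state, and it can be shown without a browser or database. This sketch uses a hypothetical in-memory `UserStore` in place of the real user table. With one shared store, the results depend on execution order. With a fresh store per test, which is what a database reset in `beforeEach` achieves, the order stops mattering.

```typescript
// Hypothetical in-memory stand-in for the app's user table
class UserStore {
  private users: { email: string }[] = [];
  create(email: string) { this.users.push({ email }); }
  count() { return this.users.length; }
}

type TestCase = { name: string; run: (store: UserStore) => boolean };

const tests: TestCase[] = [
  { name: 'create user', run: s => { s.create('test@example.com'); return s.count() === 1; } },
  { name: 'user list is empty', run: s => s.count() === 0 },
];

// Shared store: results depend on the order the tests happen to run in
function runShared(order: TestCase[]): boolean[] {
  const store = new UserStore();
  return order.map(t => t.run(store));
}

// Isolated: every test gets a fresh store, like a reset in beforeEach
function runIsolated(order: TestCase[]): boolean[] {
  return order.map(t => t.run(new UserStore()));
}

const reversed = [...tests].reverse();
console.log(runShared(tests), runShared(reversed));     // [ true, false ] [ true, true ]
console.log(runIsolated(tests), runIsolated(reversed)); // [ true, true ] [ true, true ]
```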

```typescript
// createUser, getUsers and resetDatabase are your app's own test helpers

// ❌ FLAKY: State leaks between tests
test('create user', async () => {
  await createUser({ email: 'test@example.com' });
  expect(await getUsers()).toHaveLength(1);
});

test('user list is empty', async () => {
  expect(await getUsers()).toHaveLength(0); // FAILS if previous test ran
});

// ✅ DETERMINISTIC: Isolate each test
test.beforeEach(async ({ context }) => {
  // Restore a known database state. If parallel workers share one database,
  // use unique data per test instead of a global reset.
  await resetDatabase();
  // Playwright already gives each test a fresh browser context; clearing matters
  // only if you reuse a context or preload a saved storageState
  await context.clearCookies();
});

test('create user', async () => {
  await createUser({ email: 'test@example.com' });
  expect(await getUsers()).toHaveLength(1);
});

test('user list is empty', async () => {
  expect(await getUsers()).toHaveLength(0); // ALWAYS passes
});
```

3. Environment Dependencies

Your tests make assumptions about the environment that aren't always true. Network latency varies between dev machines and CI runners. Date/time calculations break across timezones. File paths differ between Windows and Linux.
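To see how much the host timezone matters, format one instant in two zones. This sketch uses a hypothetical `formatDate` helper built on the standard `Intl.DateTimeFormat` API. Passing an explicit `timeZone` is what makes its output independent of the machine it runs on.

```typescript
// Format a date such as "January 14, 2026" in an explicit IANA timezone.
// Without `timeZone`, Intl falls back to the host's local zone, which can
// differ between developer laptops and CI runners.
function formatDate(date: Date, timeZone = 'UTC'): string {
  return new Intl.DateTimeFormat('en-US', {
    timeZone,
    year: 'numeric',
    month: 'long',
    day: 'numeric',
  }).format(date);
}

const instant = new Date('2026-01-14T10:00:00Z');
console.log(formatDate(instant));                       // prints January 14, 2026
console.log(formatDate(instant, 'Pacific/Kiritimati')); // prints January 15, 2026 (UTC+14)
```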

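```typescript
// ❌ FLAKY: Depends on system timezone
test('shows correct date', async () => {
  const date = new Date('2026-01-14T10:00:00Z');
  expect(formatDate(date)).toBe('January 14, 2026'); // Breaks where 10:00 UTC falls on another day
});

// ✅ DETERMINISTIC: Pin the timezone explicitly
test('shows correct date', async () => {
  const date = new Date('2026-01-14T10:00:00Z');
  // Assumes formatDate accepts a timezone argument
  expect(formatDate(date, 'UTC')).toBe('January 14, 2026');
});

// For dates rendered in the browser, pin the page's timezone and freeze its clock
test.use({ timezoneId: 'UTC' });

test("shows today's date", async ({ page }) => {
  await page.clock.setFixedTime(new Date('2026-01-14T10:00:00Z'));
  await page.goto('/'); // Hypothetical page that renders today's date
  await expect(page.getByText('January 14, 2026')).toBeVisible();
});
```

4. External Service Volatility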

Tests that hit real third-party APIs, payment gateways, or authentication providers inherit all their flakiness. Service downtime, rate limits, and network hiccups turn your deterministic code into a probabilistic nightmare.
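Mocking doesn't have to happen at the network layer. When the code under test calls the API from Node rather than from a browser page, one option is to inject the HTTP call so tests can pass in a canned fake. This sketch assumes you can change the function's signature. `WeatherReport`, `JsonFetcher`, `getWeather`, and `fakeFetch` are hypothetical names.

```typescript
interface WeatherReport { temp: number; conditions: string }

// The HTTP call is a parameter, so tests can swap in a fake.
type JsonFetcher = (url: string) => Promise<unknown>;

// Production default: the global fetch (built into Node 18+)
const realFetcher: JsonFetcher = async url => {
  const res = await fetch(url);
  if (!res.ok) throw new Error(`Weather API returned ${res.status}`);
  return res.json();
};

async function getWeather(fetchJson: JsonFetcher = realFetcher): Promise<WeatherReport> {
  const data = (await fetchJson('https://api.weather.com/current')) as WeatherReport;
  return { temp: data.temp, conditions: data.conditions };
}

// In a test: a fake that never touches the network and always returns the same payload
const fakeFetch: JsonFetcher = async () => ({ temp: 72, conditions: 'sunny' });

getWeather(fakeFetch).then(w => console.log(w.temp)); // 72
```

The production path still uses the real `fetch`. Only tests opt into the fake, so no test depends on the third-party service being up.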

```typescript
// ❌ FLAKY: Depends on external API availability
test('fetches weather data', async () => {
  const response = await fetch('https://api.weather.com/current');
  expect(response.status).toBe(200); // Fails when API is down/slow
});

// ✅ DETERMINISTIC: Mock external dependencies
test('shows weather data', async ({ page }) => {
  // page.route intercepts requests made by the page, not by the test process itself
  await page.route('**/api.weather.com/**', route =>
    route.fulfill({
      status: 200,
      contentType: 'application/json',
      body: JSON.stringify({ temp: 72, conditions: 'sunny' }),
    })
  );
  await page.goto('/weather'); // Hypothetical page that fetches the forecast
  await expect(page.getByText('72')).toBeVisible();
});
```

Debugging Techniques: Finding the Root Cause

When you encounter a flaky test, resist the urge to immediately add retry logic or increase timeouts. Those are band-aids. Here's the systematic debugging approach:

1. Reproduce Consistently

Run the test 50-100 times to understand failure frequency. Playwright makes this easy:

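```bash
# Run the test 100 times and count failing runs (Playwright exits non-zero on failure)
failures=0
for i in {1..100}; do
  npx playwright test flaky-test.spec.ts --reporter=line > /dev/null || failures=$((failures + 1))
done
echo "Failed $failures/100 runs"

# Or use Playwright's --repeat-each flag to run it 100 times in one invocation
npx playwright test --repeat-each=100 flaky-test.spec.ts
```

If it fails about 5% of the time, you have classic flakiness. If it fails closer to 50% of the time, suspect a systematic problem with the test environment rather than an occasional race. If it never fails locally but fails in CI, it's an environment dependency problem.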
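Why 50 to 100 runs? Here is a rough back-of-the-envelope check, assuming runs are independent. If a test fails with probability p per run, the chance of seeing at least one failure in n runs is 1 - (1 - p)^n. The sketch below solves for the smallest n that reaches a target confidence. The function name is ours, for illustration.

```typescript
// Smallest n with 1 - (1 - p)^n >= confidence: the number of runs needed to
// observe at least one failure of a test that fails with probability p.
// Assumes independent runs, which real flaky tests only approximate.
function runsToObserveFailure(p: number, confidence = 0.95): number {
  if (p <= 0 || p >= 1) throw new RangeError('p must be in (0, 1)');
  return Math.ceil(Math.log(1 - confidence) / Math.log(1 - p));
}

console.log(runsToObserveFailure(0.05)); // 59
console.log(runsToObserveFailure(0.01)); // 299
```

So a 5% flake needs about 60 runs to show up reliably, and a 1% flake needs about 300. With fewer runs, a flaky test can easily look stable.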

2. Enable Video and Trace Recording

Configure your test runner to capture artifacts only on failures:

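```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  use: {
    screenshot: 'only-on-failure',
    video: 'retain-on-failure',
    trace: 'retain-on-failure',
  },
});
```

Watch the video of a failed run. You'll often see exactly what happened: a button click that occurred before it was clickable, a network request that timed out, or an element that never appeared. For a step-by-step view with DOM snapshots and network calls, open the saved trace with `npx playwright show-trace <path/to/trace.zip>`.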

3. Analyze Network Timing

Most E2E flakiness is network-related. Enable detailed network logging:

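```typescript
import { test } from '@playwright/test';

// Log all network activity during the test
test('flaky test', async ({ page }) => {
  page.on('request', request => {
    console.log('>>>', request.method(), request.url());
  });
  page.on('response', response => {
    console.log('<<<', response.status(), response.url());
  });
  // Also log requests that never got a response (timeouts, aborted connections)
  page.on('requestfailed', request => {
    console.log('xxx', request.url(), request.failure()?.errorText);
  });
  // Your test code here
});
```

Look for patterns: Do requests complete out of order? Are responses slow? Does the failure correlate with specific API calls?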
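Once requests are logged, the "do requests complete out of order?" question can be answered mechanically. This is a minimal sketch, assuming you have collected entries of the hypothetical shape `{ url, startedAt, finishedAt }`, for example by recording timestamps in the handlers above. It reports every pair where a later-started request finished first, which is exactly the situation that breaks a test that assumes responses arrive in order.

```typescript
interface NetworkEntry { url: string; startedAt: number; finishedAt: number }

// Return [earlier, later] URL pairs where the later-started request finished first.
function findOutOfOrder(entries: NetworkEntry[]): [string, string][] {
  const sorted = [...entries].sort((a, b) => a.startedAt - b.startedAt);
  const pairs: [string, string][] = [];
  for (let i = 0; i < sorted.length; i++) {
    for (let j = i + 1; j < sorted.length; j++) {
      const startedLater = sorted[j].startedAt > sorted[i].startedAt;
      if (startedLater && sorted[j].finishedAt < sorted[i].finishedAt) {
        pairs.push([sorted[i].url, sorted[j].url]);
      }
    }
  }
  return pairs;
}

const sample: NetworkEntry[] = [
  { url: '/api/user', startedAt: 0, finishedAt: 300 },
  { url: '/api/settings', startedAt: 10, finishedAt: 120 },
  { url: '/api/feed', startedAt: 20, finishedAt: 400 },
];
console.log(findOutOfOrder(sample)); // [ [ '/api/user', '/api/settings' ] ]
```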

Architectural Patterns for Deterministic Tests

Once you've debugged the issues, apply these architectural patterns to prevent flakiness permanently:

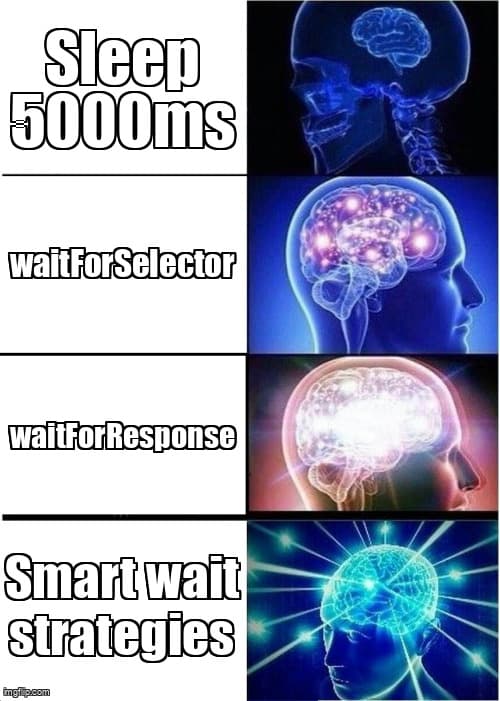

Pattern 1: Smart Wait Strategies

Replace arbitrary timeouts with condition-based waits:

```typescript
import { expect, type Page } from '@playwright/test';

// Wait for UI signals that prove the data has loaded. Web-first assertions retry
// until they pass or time out; Playwright's docs discourage 'networkidle' for tests.
async function waitForDataLoaded(page: Page) {
  await expect(page.getByTestId('content')).toBeVisible();
  // Passes whether the spinner is hidden via CSS or removed from the DOM
  await expect(page.locator('.loading-spinner')).toBeHidden();
}

// Wait for API-driven state changes
async function submitFormAndWait(page: Page) {
  // Register the wait before clicking so a fast response isn't missed
  const responsePromise = page.waitForResponse(
    resp => resp.url().includes('/api/submit') && resp.ok()
  );
  await page.getByTestId('submit').click();
  await responsePromise;
  // Verify the UI updated based on the response
  await expect(page.locator('.success-message')).toBeVisible();
}
```

Pattern 2: Test Data Builders

Create isolated, predictable test data for each test:

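```typescript
import { test } from '@playwright/test';
import { randomUUID } from 'node:crypto';
// `db` is your app's data-access layer (a MongoDB-style API is assumed here)

// Seed unique test data per test run
class TestDataBuilder {
  private readonly runId = randomUUID();
  private counter = 0;

  createUser(overrides: Record<string, unknown> = {}) {
    const n = this.counter++; // Each user gets a distinct email, even within one builder
    return {
      email: `user-${this.runId}-${n}@test.com`,
      username: `testuser-${this.runId}-${n}`,
      ...overrides,
    };
  }

  async seedDatabase() {
    return {
      user: await db.users.create(this.createUser()),
      admin: await db.users.create(this.createUser({ role: 'admin' })),
    };
  }

  // Delete only this builder's records, never data other parallel tests rely on
  async cleanup() {
    await db.users.deleteMany({ email: { $regex: this.runId } });
  }
}

test('user can update profile', async ({ page }) => {
  const builder = new TestDataBuilder();
  const { user } = await builder.seedDatabase();
  try {
    await page.goto(`/profile/${user.id}`);
    // Test uses isolated data, no conflicts
  } finally {
    await builder.cleanup();
  }
});
```

Pattern 3: Service Layer Mocking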

Mock external dependencies at the network boundary:

```typescript
import { test, type Page } from '@playwright/test';

// Create reusable mock handlers
class MockServiceLayer {
  constructor(private page: Page) {}

  async mockPaymentSuccess() {
    await this.page.route('**/api/stripe/**', route =>
      route.fulfill({
        status: 200,
        contentType: 'application/json',
        body: JSON.stringify({ id: 'pi_test_123', status: 'succeeded', amount: 1000 }),
      })
    );
  }

  async mockAuthService(userRole: string) {
    await this.page.route('**/api/auth/**', route =>
      route.fulfill({
        status: 200,
        contentType: 'application/json',
        body: JSON.stringify({ user: { role: userRole, id: '123' } }),
      })
    );
  }
}

test('checkout flow', async ({ page }) => {
  const mocks = new MockServiceLayer(page);
  await mocks.mockPaymentSuccess();
  // Drive the checkout UI here; no real payment API is called
});
```

Pattern 4: Idempotent Test Setup

Make setup operations safe to run multiple times:

```typescript
import { test } from '@playwright/test';
// `db` and `loginAs` are your app's own helpers

// Idempotent setup: converges to the same state no matter what already exists
async function ensureTestUserExists(email: string) {
  const desired = { email, verified: true, loginCount: 0 };
  const existing = await db.users.findOne({ email });
  if (existing) {
    await db.users.update({ email }, desired);
    return { ...existing, ...desired }; // Return the updated state, not the stale record
  }
  return await db.users.create(desired);
}

test.beforeEach(async () => {
  // Leaves the user in the same state no matter how many times it runs
  const user = await ensureTestUserExists('test@example.com');
  await loginAs(user);
});
```

Metrics: Tracking and Preventing Flakiness

You can't improve what you don't measure. Implement flakiness tracking:

```typescript
// Track test outcomes over time
interface TestResult {
  testName: string;
  status: 'passed' | 'failed';
  duration: number;
  timestamp: Date;
  commitSha: string;
}

// Calculate each test's failure rate across all recorded runs
function calculateFlakiness(results: TestResult[]) {
  const byTest = new Map<string, TestResult[]>();
  for (const r of results) {
    const runs = byTest.get(r.testName) ?? [];
    runs.push(r);
    byTest.set(r.testName, runs);
  }
  return [...byTest].map(([testName, runs]) => {
    const failures = runs.filter(r => r.status === 'failed').length;
    const flakiness = (failures / runs.length) * 100;
    return {
      testName,
      flakiness: `${flakiness.toFixed(1)}%`,
      // Mixed outcomes mean flaky; 0% or 100% means consistently passing or failing
      shouldInvestigate: flakiness > 0 && flakiness < 100,
    };
  });
}
```

Set up CI monitoring to automatically flag tests whose failure rate is above 0% but below 100%. These are your flaky tests. Tests that fail 100% of the time point to a genuine bug or a broken test, and tests that pass 100% of the time are reliable.
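An overall failure rate can mislead. A test that failed on every run of one broken commit and passed once the bug was fixed would also show a mixed rate and be flagged. A stricter signal flags a test that both passed and failed on the same commit, because the code under test did not change between those runs. The sketch below implements that refinement using the `commitSha` field already in `TestResult`. `flakyOnSameCommit` is a hypothetical name.

```typescript
interface TestResult {
  testName: string;
  status: 'passed' | 'failed';
  duration: number;
  timestamp: Date;
  commitSha: string;
}

// Returns testName -> commits on which that test both passed and failed.
function flakyOnSameCommit(results: TestResult[]): Map<string, string[]> {
  // testName -> commitSha -> outcomes observed on that commit
  const seen = new Map<string, Map<string, Set<TestResult['status']>>>();
  for (const r of results) {
    const byCommit = seen.get(r.testName) ?? new Map<string, Set<TestResult['status']>>();
    const statuses = byCommit.get(r.commitSha) ?? new Set<TestResult['status']>();
    statuses.add(r.status);
    byCommit.set(r.commitSha, statuses);
    seen.set(r.testName, byCommit);
  }
  const flaky = new Map<string, string[]>();
  for (const [testName, byCommit] of seen) {
    const commits = [...byCommit].filter(([, s]) => s.size > 1).map(([sha]) => sha);
    if (commits.length > 0) flaky.set(testName, commits);
  }
  return flaky;
}

const sample: TestResult[] = [
  { testName: 'checkout', status: 'passed', duration: 900, timestamp: new Date(0), commitSha: 'abc' },
  { testName: 'checkout', status: 'failed', duration: 5000, timestamp: new Date(1), commitSha: 'abc' },
  { testName: 'login', status: 'failed', duration: 300, timestamp: new Date(2), commitSha: 'abc' },
  { testName: 'login', status: 'passed', duration: 310, timestamp: new Date(3), commitSha: 'def' },
];
console.log(flakyOnSameCommit(sample)); // Map(1) { 'checkout' => [ 'abc' ] }
```

Here `login` failed on one commit and passed on the next. That looks like a real bug that was fixed, so it is not reported as flaky.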

Quarantine Strategy

When you identify flaky tests but can't fix them immediately, quarantine them:

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  projects: [
    {
      name: 'stable',
      testMatch: /.*\.spec\.ts$/,
      testIgnore: /.*\.quarantine\.spec\.ts$/, // Every spec except quarantined ones
    },
    {
      name: 'quarantine',
      testMatch: /.*\.quarantine\.spec\.ts$/,
      retries: 3, // Allow retries only for quarantined tests
    },
  ],
});
```

Run stable tests on every commit (`npx playwright test --project=stable`) and quarantined tests nightly with retry logic (`--project=quarantine`). This keeps flaky tests from blocking PRs while still alerting you to real regressions.

The Flakiness Elimination Checklist

Before marking any test complete, verify it passes this checklist:

- Runs 100 times consecutively without failure on your local machine

- Uses explicit waits for all async operations (network, animations, state changes)

- Cleans up its own state in beforeEach/afterEach hooks

- Mocks external services that aren't under your control

- Uses unique test data that won't collide with parallel test runs

- Passes in CI environment with same success rate as local

- Works regardless of execution order, including when your test runner shuffles or randomizes test order (where it supports that)

- Handles timing variations between fast dev machines and slower CI runners
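It is worth knowing what the first checklist item actually proves. Assume runs are independent. Seeing n consecutive passes is still reasonably likely, with probability (1 - p)^n at or above some small α, for any failure rate p up to 1 - α^(1/n). That value is the largest failure rate consistent with your clean runs at confidence 1 - α. The sketch below computes it. The function name is ours.

```typescript
// Upper confidence bound on the per-run failure rate after n runs with zero failures:
// the largest p for which n straight passes still has probability >= alpha.
// Assumes independent runs. For large n this approaches the "rule of three", 3 / n.
function failureRateUpperBound(cleanRuns: number, alpha = 0.05): number {
  if (cleanRuns < 1) throw new RangeError('need at least one run');
  return 1 - Math.pow(alpha, 1 / cleanRuns);
}

console.log((failureRateUpperBound(100) * 100).toFixed(1) + '%'); // 3.0%
```

In other words, 100 clean local runs are still consistent with a test that fails up to about 3% of the time. Matching the success rate in CI adds independent evidence.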

Framework-Specific Best Practices

Playwright

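- After navigation, prefer web-first assertions such as `await expect(locator).toBeVisible()` over `page.waitForLoadState('networkidle')`, which Playwright's docs discourage for testing

- Leverage auto-waiting: Playwright waits for elements to be actionable before clicking

- Use `test.describe.configure({ mode: 'serial' })` to run tests in serial when needed

- Enable `fullyParallel: true` to catch state pollution issues early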

Cypress

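- Understand retry-ability: in `cy.get().should().click()`, the query and assertion retry until the assertion passes, but the `.click()` action runs once and is not retried

- Use `cy.intercept()` to stub network requests reliably

- Avoid `cy.wait(5000)`; use `cy.wait('@apiAlias')` instead

- Clear cookies/localStorage in a `beforeEach()` hook (Cypress 12+ does this automatically when `testIsolation` is enabled, which is the default)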

Selenium

- Use `WebDriverWait` with explicit conditions instead of implicit waits; Selenium's docs warn that mixing the two causes unpredictable wait times

- Implement custom `ExpectedConditions` for complex scenarios

- Always call `driver.quit()` in teardown to prevent session leaks

- Use headless mode in CI but keep headed mode for debugging

Conclusion: The Trust Equation

Deterministic tests aren't just about eliminating false positives; they're about building trust. When your test suite is reliable, developers stop ignoring failures. When tests are fast and stable, teams run them before every commit instead of after. When automation catches real bugs consistently, QA becomes a force multiplier instead of a bottleneck.

The path from flaky to deterministic isn't quick, but it's systematic. Identify root causes using video traces and network logs. Apply architectural patterns that eliminate timing dependencies and state pollution. Track metrics to prevent regression. Most importantly, never accept "it's just flaky" as a permanent state—every unreliable test is a solvable problem.

Start today: pick your flakiest test, run it 100 times, watch the failure recording, and apply the patterns above. Once you see a test go from 5% failure rate to 0%, you'll never accept flakiness again.

Need Help Building Deterministic Test Suites?

Desplega AI helps teams eliminate flaky tests and build reliable CI/CD pipelines. Our agentic workflow orchestration ensures your test automation is deterministic, fast, and trustworthy.
